Calibration or adjustment?

Definitions of the International Vocabulary of Metrology

Metrology is the science of measurement and its applications. As in all fields, certain documents are essential to establish a common basis. One such document is the International Vocabulary of Metrology - Basic and General Concepts and Associated Terms (VIM). Published by a consortium of international organizations, it is intended as a reference work. The formal definitions presented here are quoted directly from it and are not easily understood by non-metrologists, so each one is followed by a plain-language rewording.

Calibration

Formal definition

Operation that, under specified conditions, in a first step, establishes a relation between the quantity values with measurement uncertainties provided by measurement standards and corresponding indications with associated measurement uncertainties and, in a second step, uses this information to establish a relation for obtaining a measurement result from an indication.

In other words...

Calibration is the act of comparing an instrument's measurement and its uncertainty with those of a standard, which serves as the reference. Normally, the standard used during a calibration should have a smaller measurement uncertainty than the instrument under test.

Measurement uncertainty describes the dispersion of the values that can reasonably be attributed to the measured quantity. It is made up of several components, which are grouped into two types according to how they are evaluated. Type A uncertainty is evaluated statistically from a series of repeated measurements; this is where the precision of the measurement is assessed. Type B covers every other kind of evaluation, based on information such as drift, noise, resolution, instrument limits, etc.
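To make the Type A / Type B split concrete, here is a minimal sketch following the usual GUM approach (JCGM 100). It assumes independent components combined by root-sum-of-squares, a rectangular distribution for the resolution term, and a coverage factor k = 2 for roughly 95 % confidence (which presumes an approximately normal result). The readings and the 0.01 °C resolution are hypothetical values chosen for illustration, not data from a real certificate.

```python
import math
import statistics

def type_a_uncertainty(readings):
    """Type A: standard uncertainty of the mean of repeated readings (s / sqrt(n))."""
    return statistics.stdev(readings) / math.sqrt(len(readings))

def type_b_rectangular(half_width):
    """Type B: standard uncertainty of a +/- half_width bound, rectangular distribution."""
    return half_width / math.sqrt(3)

def combined_uncertainty(*components):
    """Root-sum-of-squares of independent standard uncertainties."""
    return math.sqrt(sum(u * u for u in components))

# Hypothetical example: five repeated readings (°C) from an instrument with 0.01 °C resolution
readings = [25.02, 25.04, 25.03, 25.05, 25.03]
u_a = type_a_uncertainty(readings)
u_res = type_b_rectangular(0.01 / 2)   # resolution: the true value may lie half a digit either way
u_c = combined_uncertainty(u_a, u_res)
U = 2 * u_c                            # expanded uncertainty, k = 2 (about 95 % confidence)
print(f"u_A={u_a:.4f}  u_B={u_res:.4f}  u_c={u_c:.4f}  U={U:.4f} °C")
```

A real uncertainty budget would list more Type B terms (the reference standard, drift, stability of the conditions); each one is simply added as another argument to `combined_uncertainty`.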

During a calibration, the experimental conditions must be rigorously defined. In addition, since a calibration is a comparison between a measuring instrument and a reference instrument, the points at which the two are compared must be chosen in advance. Consider a calibration of carbon dioxide (CO2) concentration. In a given volume, the concentration of the gas varies with temperature and atmospheric pressure. It is therefore important to fix these three parameters (volume, temperature and pressure) before varying the gas concentration. The CO2 reading can then be checked at 1%, 5% and 10% to see whether the instrument under test reads accurately at each of these concentrations.
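Why temperature and pressure must be fixed can be illustrated with the ideal gas law: for a given volume fraction x, the amount of CO2 per cubic metre is c = x·P/(R·T). The sketch below assumes ideal-gas behaviour; the two sets of conditions are hypothetical and only show how much the amount of gas in the measurement volume shifts when the conditions change.

```python
R = 8.314462618  # molar gas constant, J/(mol·K)

def co2_molar_concentration(volume_fraction, temperature_c, pressure_pa):
    """Moles of CO2 per cubic metre in an ideal gas mixture: c = x * P / (R * T)."""
    temperature_k = temperature_c + 273.15
    return volume_fraction * pressure_pa / (R * temperature_k)

# The three calibration points from the text, under two hypothetical sets of conditions
for percent in (1, 5, 10):
    x = percent / 100
    c_ref = co2_molar_concentration(x, 20.0, 101_325)   # 20 °C, standard atmosphere
    c_warm = co2_molar_concentration(x, 30.0, 95_000)   # warmer room, lower pressure
    change = 100 * (c_warm / c_ref - 1)
    print(f"{percent:>2} % CO2: {c_ref:.3f} vs {c_warm:.3f} mol/m3 ({change:+.1f} %)")
```

With these hypothetical conditions, the amount of CO2 in the volume drops by roughly 9 % at every point, which is far larger than a typical instrument specification; this is why the conditions are fixed before the concentration is varied.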

Here, the uncertainty is essential, because the calibration establishes whether the measuring instrument gives a value within the manufacturer's specifications (for example, ±0.05% CO2).
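A minimal sketch of the check at the 1%, 5% and 10% points, assuming that ±0.05% means an absolute tolerance in percentage points of CO2 (the specification could also be read as relative). The readings are hypothetical, and this simple version compares only the error; the uncertainty of the reference is not taken into account here.

```python
TOLERANCE = 0.05  # assumed: absolute tolerance in % CO2, from the example specification

def check_points(points, tolerance=TOLERANCE):
    """Return (reference, reading, error, within_spec) for each calibration point."""
    results = []
    for reference, reading in points:
        error = reading - reference
        results.append((reference, reading, error, abs(error) <= tolerance))
    return results

# Hypothetical readings of the instrument under test at the 1 %, 5 % and 10 % points
points = [(1.00, 1.02), (5.00, 4.97), (10.00, 10.08)]
for ref, reading, err, ok in check_points(points):
    print(f"{ref:5.2f} % -> {reading:5.2f} %  error {err:+.2f}  {'OK' if ok else 'OUT OF SPEC'}")
```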

Laboratories that issue certificates of traceability to the SI apply the same principle. They compare a measuring instrument with a working standard whose uncertainty is established through an unbroken chain of calibrations back to an international standard.
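Each link in a traceability chain adds its own uncertainty, so an instrument can never be known more precisely than the standard it was compared with. The sketch below is a deliberately simplified model: it assumes each step contributes one independent standard uncertainty, combined by root-sum-of-squares. The chain and its values are hypothetical.

```python
import math

def propagate_chain(step_uncertainties):
    """Cumulative standard uncertainty after each step of a calibration chain.

    Steps are listed from the international standard downwards; each value is
    the standard uncertainty added by that comparison. Contributions are assumed
    independent and combined by root-sum-of-squares.
    """
    cumulative = []
    total_sq = 0.0
    for u in step_uncertainties:
        total_sq += u * u
        cumulative.append(math.sqrt(total_sq))
    return cumulative

# Hypothetical chain, in °C
chain = [("primary standard", 0.002), ("reference standard", 0.005),
         ("working standard", 0.010), ("instrument under test", 0.030)]
cumulative = propagate_chain([u for _, u in chain])
for (name, _), u in zip(chain, cumulative):
    print(f"{name:<22} u = {u:.4f} °C")
```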

Calibration does not change the reading that the measuring instrument will give under the same conditions. It only verifies whether the instrument gives a value within the measurement uncertainty stated in its technical specifications.

Modifying the reading is what the International Vocabulary of Metrology calls adjustment.

Adjustment

Formal definition

Set of operations carried out on a measuring system so that it provides prescribed indications corresponding to given values of a quantity to be measured.

In other words…

Adjustment is the act of changing the value rendered by the measuring instrument. Not all instruments are adjustable. An outdoor toluene thermometer, for example, is not typically adjustable. However, it can still be calibrated to check whether it reads the outdoor temperature within its advertised accuracy (perhaps ±2 °C).

For an instrument to be adjustable, it must have a mechanism for correcting its reading toward the target value at specific points. The adjustment is made at certain points within a given reading range. The more points are used, the more closely the adjustment can follow the instrument's behavior across that range.
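One common way to turn a few adjustment points into a correction over the whole range is piecewise-linear interpolation between them. The sketch below shows that generic technique only; it is not a description of any particular manufacturer's mechanism. `make_correction` is a hypothetical helper, and the three raw/reference pairs are invented values.

```python
from bisect import bisect_right

def make_correction(points):
    """Build a piecewise-linear correction from (raw_reading, reference_value) pairs."""
    points = sorted(points)
    raws = [raw for raw, _ in points]
    if len(points) < 2 or len(set(raws)) != len(raws):
        raise ValueError("need at least two adjustment points with distinct raw readings")

    def correct(raw):
        # Segment containing `raw`; readings outside the adjusted range use the end segments
        i = min(max(bisect_right(raws, raw) - 1, 0), len(points) - 2)
        (x0, y0), (x1, y1) = points[i], points[i + 1]
        # Linear interpolation between the two surrounding adjustment points
        return y0 + (y1 - y0) * (raw - x0) / (x1 - x0)

    return correct

# Hypothetical three-point adjustment: raw instrument readings vs reference values (°C)
correct = make_correction([(0.12, 0.00), (25.08, 25.00), (50.15, 50.00)])
print(correct(12.60), correct(40.00))
```

Adding points shortens each segment, so a non-linear instrument response is followed more closely; outside the adjusted range the correction is only an extrapolation and deserves less trust.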

Following an adjustment, it is imperative to perform a second calibration to confirm that the instrument still meets the manufacturer's technical specifications after the modification.

Is the adjustment always necessary?

If the measuring instrument returns a value within its stated measurement uncertainty, most calibration laboratories will not perform an adjustment. They will simply issue a certificate of conformity.

If the deviation from the reference value, together with its measurement uncertainty, exceeds what the specifications allow, an adjustment is appropriate, provided the instrument allows it.
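Laboratories formalize this choice with a decision rule. The sketch below uses one simple guard-banded rule among several in use: a point passes only if the whole interval error ± U lies inside the tolerance. Treating an "indeterminate" result as a reason to adjust is an assumption made here for illustration; a laboratory's own decision rule may differ.

```python
def conformity(error, expanded_uncertainty, tolerance):
    """Classify a calibration point with a simple guard-banded decision rule.

    "pass": the whole interval error ± U lies inside ± tolerance
    "fail": the whole interval lies outside ± tolerance
    "indeterminate": the interval straddles a tolerance limit
    """
    low, high = error - expanded_uncertainty, error + expanded_uncertainty
    if -tolerance <= low and high <= tolerance:
        return "pass"
    if low > tolerance or high < -tolerance:
        return "fail"
    return "indeterminate"

def needs_adjustment(status, adjustable):
    """Assumed policy: adjust when the point does not clearly pass and adjustment is possible."""
    return status != "pass" and adjustable

# Hypothetical points: (error, U, tolerance) in °C
for err, u, tol in [(0.03, 0.02, 0.1), (0.09, 0.02, 0.1), (0.15, 0.02, 0.1)]:
    status = conformity(err, u, tol)
    print(err, status, needs_adjustment(status, adjustable=True))
```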

Examples of calibration certificates

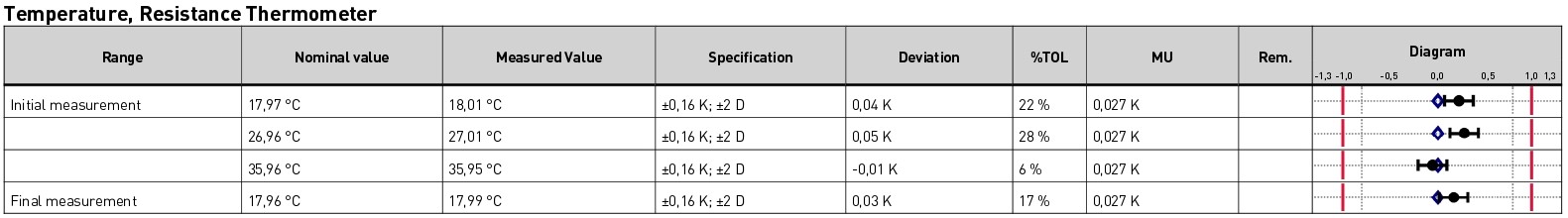

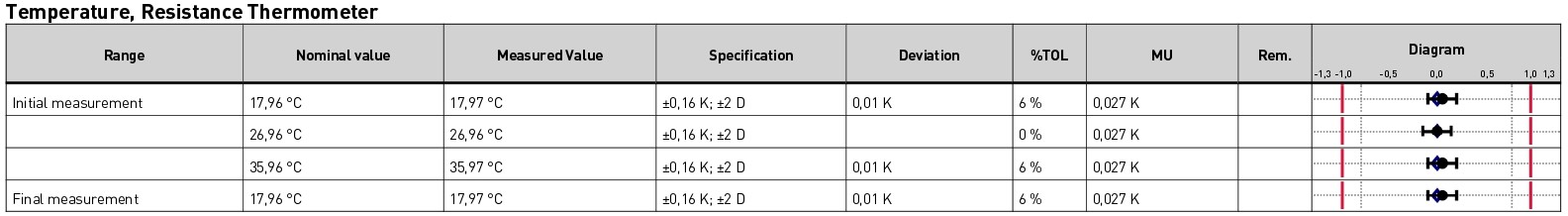

These are example certificates from a temperature calibration of a precision measuring instrument, the PTH450.

The laboratory performed the calibration at three points (17.96 °C, 26.96 °C and 35.96 °C), then returned to 17.96 °C for a final measurement. This repeated measurement is used to evaluate hysteresis, which is the effect of past measurements on the current measurement.
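A minimal sketch of how the repeated point can be used: hysteresis is estimated here as the change in the instrument's error between the first and the final measurement at 17.96 °C. The readings are hypothetical, and `hysteresis` is an illustrative helper, not the laboratory's procedure.

```python
def hysteresis(first, repeat):
    """Change in error between the first and the repeated measurement at one set point.

    Each argument is a (reference_value, instrument_reading) pair in °C.
    """
    (ref_1, reading_1), (ref_2, reading_2) = first, repeat
    error_first = reading_1 - ref_1
    error_repeat = reading_2 - ref_2
    return error_repeat - error_first

# Hypothetical readings: 17.96 °C measured first, then again after 26.96 °C and 35.96 °C
h = hysteresis((17.96, 17.99), (17.96, 18.01))
print(f"hysteresis ≈ {h:+.3f} °C")
```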

The technical characteristics announced by the manufacturer (Dracal Technologies) specify a measurement uncertainty of ±0.2 °C over the full measurement range (-40 °C to 125 °C), tightened to ±0.1 °C between 20 °C and 60 °C.
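The specification above can be written as a small lookup that gives the limit applying at each calibration point. `pth450_tolerance` is a hypothetical name, and treating the 20 °C and 60 °C band edges as inclusive is an assumption.

```python
def pth450_tolerance(temperature_c):
    """Temperature specification quoted in the text, in °C (band edges assumed inclusive)."""
    if not -40.0 <= temperature_c <= 125.0:
        raise ValueError("outside the specified measurement range (-40 °C to 125 °C)")
    if 20.0 <= temperature_c <= 60.0:
        return 0.1
    return 0.2

for point in (17.96, 26.96, 35.96):
    print(f"{point} °C -> ±{pth450_tolerance(point)} °C")
```

Note that 17.96 °C lies just below the 20 °C to 60 °C band, so the wider ±0.2 °C limit applies at that point, while the 26.96 °C and 35.96 °C points are held to ±0.1 °C.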

Here, the instrument complies with the manufacturer's specifications: the values and uncertainties measured during calibration, stated at a 95% confidence level, fall within these limits.

No adjustment was required, but one was performed for demonstration purposes. The results of the second calibration show that the measured value is even closer to the nominal value at all three points.

Calibration in common use

When you are looking for a measuring instrument whose measurement you can correct, you usually ask for an instrument that is "calibratable". In everyday usage, then, the terminology of the International Vocabulary of Metrology is not followed.

Indeed, comparing without correcting may seem pointless when the goal is simply to use a precision measuring instrument. The instrument should render the desired value, that is, the value as close as possible to reality, with the accuracy announced by the manufacturer. If the calibration shows a significant difference between the measured value and the nominal value, and the instrument is adjustable, the adjustment will generally be made.

Common usage therefore blends the verification step and the correction step into a single action called "calibration".

Usage at Dracal Technologies

As you may know, Dracal Technologies offers measuring instruments with a "calibratable" option (-CAL option). With this option, you can perform the calibration and adjustment yourself with DracalView or the command line tools. You can also send the instrument to the laboratory of your choice for calibration and adjustment.

When we talk about calibration at Dracal, we use the term in its common sense: for us, it is the entire process of comparing with a more accurate instrument and correcting the values rendered by the Dracal instrument. Our three-point calibration function is designed to make this correction as accurately as possible. For more details on our three-point calibration mechanism, see the following documentation.

References

BIPM, IEC, IFCC, ILAC, ISO, IUPAC, IUPAP and OIML. International vocabulary of metrology - Basic and general concepts and associated terms (VIM), 3rd edition. Joint Committee for Guides in Metrology, JCGM 200:2012. URL: https://www.bipm.org/documents/20126/2071204/JCGM_200_2012.pdf/f0e1ad45-d337-bbeb-53a6-15fe649d0ff1.